There are three main properties that make Iago a good fit for Twitter:

- High performance: To load-test systems at the highest levels of performance, your load generator must be at least as performant as they are. It must generate traffic in a precise and predictable way to minimize variance between test runs, so that results can be compared across development iterations. Additionally, testing systems to failure is an important part of capacity planning, and it requires you to generate load well in excess of expected production traffic.

- Multi-protocol: Modeling a system as complex as Twitter can be difficult, but it’s made easier by decomposing it into component services. Once decomposed, each piece can be tested in isolation, which requires your load generator to speak each service’s protocol. Twitter has more than 100 such services, and Iago can test, and has tested, most of them thanks to its built-in support for the protocols we use, including HTTP, Thrift and several others.

- Extensible: Iago is designed first and foremost for engineers. It assumes that the person building a system will also be interested in validating its performance and will know best how to do so. As such, it’s designed from the ground up to be extensible, making it easy to generate new traffic types, over new protocols and with individualized traffic sources. It also provides sensible defaults for common use cases, while allowing extensive configuration without writing code if that’s your preference.
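To make the multi-protocol and extensibility points concrete, here is a minimal Scala sketch of one way a load generator can keep protocol handling separate from traffic generation. Every name in it (`Transport`, `RequestSource`, `LoadConfig`, `HttpGet`, `drive`) is hypothetical and chosen for illustration. This is not Iago’s actual API, and the fake transport stands in for a real HTTP or Thrift client.

```scala
// Hypothetical sketch: Transport, RequestSource, LoadConfig, HttpGet and drive are
// illustrative names only and are NOT Iago's actual API.

// A protocol adapter: sends one request and returns its response.
trait Transport[Req, Rep] {
  def send(req: Req): Rep
}

// A user-supplied traffic source: turns input records (e.g. log lines)
// into protocol-specific requests.
trait RequestSource[Req] {
  def toRequests(lines: Seq[String]): Seq[Req]
}

// Sensible defaults that can be overridden as configuration, without new code.
final case class LoadConfig(requestsPerSecond: Int = 100, durationSeconds: Int = 60)

// A toy HTTP-like request type and an in-memory transport standing in for a real client.
final case class HttpGet(path: String)

object FakeHttpTransport extends Transport[HttpGet, Int] {
  // Pretends every request succeeds with status 200.
  def send(req: HttpGet): Int = 200
}

object PathSource extends RequestSource[HttpGet] {
  // Treats each non-blank input line as a URL path.
  def toRequests(lines: Seq[String]): Seq[HttpGet] =
    lines.map(_.trim).filter(_.nonEmpty).map(HttpGet(_))
}

// The driver is generic: it knows nothing about HTTP, Thrift or any other protocol.
def drive[Req, Rep](source: RequestSource[Req], transport: Transport[Req, Rep], lines: Seq[String]): Seq[Rep] =
  source.toRequests(lines).map(transport.send)

val config = LoadConfig(requestsPerSecond = 500) // override one default, keep the rest
val statuses = drive(PathSource, FakeHttpTransport, Seq("/home", "  ", "/search"))
```

Under this design, supporting a new protocol means writing one new `Transport`, and supporting a new traffic source means writing one new `RequestSource`; the driver and its defaults stay unchanged.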

Iago is the load generator we always wished we had. Now that we’ve built it, we want to share it with others who might need it to solve similar problems. Iago is now open source on GitHub under the Apache License 2.0, and we are happy to accept any feedback (or pull requests) the open source community might have.

How does Iago work?

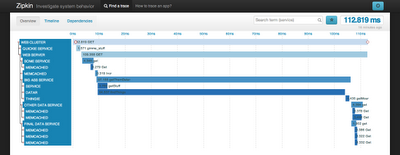

Iago’s documentation goes into more detail, but in brief: Iago is written in Scala and is designed to be extended by anyone writing code for the JVM platform. Non-blocking requests are generated at a specified rate, using an underlying, configurable statistical distribution (by default, a Poisson process). The request rate can be varied as needed, for instance to warm up caches before applying full production load. In general, Iago holds the arrival rate term of Little’s Law fixed rather than the number of concurrent users; concurrency is allowed to float with service latency. This makes multiple test runs far easier to compare, and it keeps a regression in the service under test from slowing down the load generator itself.
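As an illustration of the two ideas in the paragraph above, the following Scala sketch schedules requests as a Poisson process by drawing exponentially distributed gaps between arrivals, then applies Little’s Law, L = λW, to show how in-flight concurrency floats once the rate is fixed. It uses only the standard library; the function names are hypothetical, and this is the textbook technique rather than Iago’s own implementation.

```scala
import scala.util.Random

// Poisson arrivals: inter-arrival gaps are exponential with mean 1/rps seconds,
// sampled by inverse transform: gap = -ln(U) / rps, with U uniform on (0, 1].
def nextGapNanos(rps: Double, rng: Random): Long = {
  val u = 1.0 - rng.nextDouble() // nextDouble is in [0, 1), so u is in (0, 1]
  (-math.log(u) / rps * 1e9).toLong
}

// Absolute send times (nanosecond offsets from test start) for the first n requests.
def arrivalSchedule(rps: Double, n: Int, rng: Random): Vector[Long] =
  Iterator.iterate(0L)(t => t + nextGapNanos(rps, rng)).drop(1).take(n).toVector

// Little's Law, L = λW: with the arrival rate λ fixed, the number of requests in
// flight L is not chosen up front; it floats with the service latency W.
def expectedInFlight(rps: Double, latencySeconds: Double): Double =
  rps * latencySeconds

val rng = new Random(42)
val times = arrivalSchedule(1000.0, 5, rng)
// At 10,000 RPS, 50 ms latency implies ~500 requests in flight; 200 ms implies ~2,000.
val atNormalLatency = expectedInFlight(10000.0, 0.050)
val atDegradedLatency = expectedInFlight(10000.0, 0.200)
```

A generator built this way would sleep until each scheduled offset and fire the request without waiting for earlier responses. If latency quadruples from 50 ms to 200 ms, the offered rate stays at 10,000 RPS and only the in-flight count grows.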

In short, Iago strives to model an open system, where requests arrive independently of your service’s ability to handle them. This contrasts with load generators that model closed systems, in which users patiently tolerate whatever latency you give them. This distinction allows us to closely mimic the failure modes we would encounter in production.
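The difference between the two models shows up clearly in a back-of-the-envelope calculation. This Scala sketch uses the interactive response time law from queueing theory, X = N / (W + Z), for a closed system of N users with response time W and think time Z. The user count, latencies and target rate are made-up numbers for illustration, and the function name is hypothetical.

```scala
// Closed system: a fixed pool of users, each of whom sends a request, waits
// latencySeconds for the reply, then "thinks" for thinkSeconds before the next one.
// Throughput follows the interactive response time law: X = N / (W + Z).
def closedSystemRps(users: Int, latencySeconds: Double, thinkSeconds: Double): Double =
  users / (latencySeconds + thinkSeconds)

// An open-system generator keeps offering this rate regardless of latency.
val targetRps = 10000.0

// 100 users with no think time: healthy at 10 ms latency, degraded at 100 ms.
val closedHealthy = closedSystemRps(100, 0.010, 0.0)  // ~10,000 RPS
val closedDegraded = closedSystemRps(100, 0.100, 0.0) // ~1,000 RPS: the load backs off

// Fraction of the intended load a closed-system generator silently stops sending.
val closedShortfall = 1.0 - closedDegraded / targetRps // ~0.9
```

When latency rises tenfold, the closed-system generator quietly sends a tenth of the load, which masks the overload. An open-system generator keeps offering the target rate, so the service under test experiences the same pressure it would in production.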

Part of achieving high performance is the ability to scale horizontally, and unsurprisingly, Iago is no different in this respect from the systems we test with it. A single instance of Iago is composed of cooperating processes that can generate roughly 10K RPS, depending on factors such as payload size, the response time of the system under test, the number of ephemeral sockets available, and how fast you can actually generate the messages your protocol requires. Despite this complexity, by scaling horizontally we routinely use Iago to test systems at Twitter at well over 200K RPS. Internally we do this on our Apache Mesos grid computing infrastructure, but Iago can adapt to any system that supports creating multiple JVM processes that can discover each other using Apache ZooKeeper.
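The scaling arithmetic can be sketched in a few lines of Scala. The per-instance figure of 10K RPS is the rough number quoted in the text and is passed in as an assumption, since the real ceiling depends on the factors listed there. The function names are hypothetical, and the sketch deliberately ignores how instances are launched (for example on Mesos) or how they discover each other (via ZooKeeper).

```scala
// Back-of-the-envelope sizing for horizontal scaling. perInstanceRps is an assumption:
// roughly 10K RPS per instance, but the real figure depends on payload size, target
// latency, available ephemeral sockets and message-generation cost.
def instancesNeeded(targetRps: Int, perInstanceRps: Int): Int = {
  require(targetRps >= 0 && perInstanceRps > 0)
  (targetRps + perInstanceRps - 1) / perInstanceRps // integer ceiling division
}

// Split an aggregate rate across instances so that shares differ by at most 1 RPS.
def splitRate(targetRps: Int, instances: Int): Vector[Int] = {
  require(instances > 0)
  val base = targetRps / instances
  val extra = targetRps % instances // the first `extra` instances take one more
  Vector.tabulate(instances)(i => if (i < extra) base + 1 else base)
}

val n = instancesNeeded(200000, 10000) // 20 instances
val shares = splitRate(200000, n)      // 20 shares of 10,000 RPS each
```

In practice you would leave headroom below the per-instance ceiling, since an instance running at its limit can no longer hold the arrival rate steady.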

Iago at Twitter

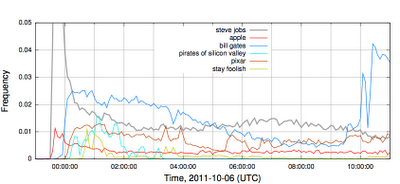

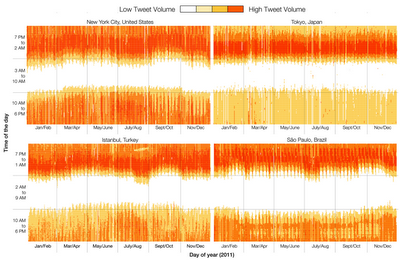

Iago has been used at Twitter throughout our stack, from our core database interfaces, storage subsystems and domain logic up to the systems accepting front-end web requests. We routinely evaluate new hardware with it, have extended it to support correctness testing at scale, and use it to test highly specific endpoints such as the new tailored trends, personalized search, and Discovery releases. We’ve used it to model anticipated load for large events as well as the overall growth of our system over time. It’s also useful for providing background traffic while other tests are running, so that the system sees the same mix of usage we encounter in production.

Acknowledgements & Future Work

Iago was primarily authored by James Waldrop (@hivetheory), but as with any such engineering effort, a large number of people have contributed. Special thanks go out to the Finagle team, Marius Eriksen (@marius), Arya Asemanfar (@a_a), Evan Meagher (@evanm), Trisha Quan (@trisha) and Stephan Zuercher (@zuercher) for being tireless consumers as well as contributors to the project. Furthermore, we’d like to thank Raffi Krikorian (@raffi) and Dave Loftesness (@dloft) for originally envisioning and spearheading the effort to create Iago.

To view the Iago source code and participate in the creation and development of our roadmap, please visit Iago on GitHub. If you have any further questions, we suggest joining the mailing list and following @iagoloadgen. If you’re at the Velocity Conference this week in San Francisco, please swing by our office hours to learn more about Iago.

- Chris Aniszczyk, Manager of Open Source (@cra)